In September 2024, the LLM inference acceleration work of the ICT-NLP group was accepted by the NeurIPS 2024 conference. The full name of NeurIPS is Thirty-eighth Conference on Neural Information Processing Systems, which is one of the top conferences in the field of machine learning. NeurIPS 2024 will be held in Vancouver, Canada from December 9th to 15th, 2024.

The brief introduction of the accepted paper is as follows:

- Speculative Decoding with CTC-based Draft Model for LLM Inference Acceleration. (Zhuofan Wen, Shangtong Gui, Yang Feng)

- NeurIPS, poster

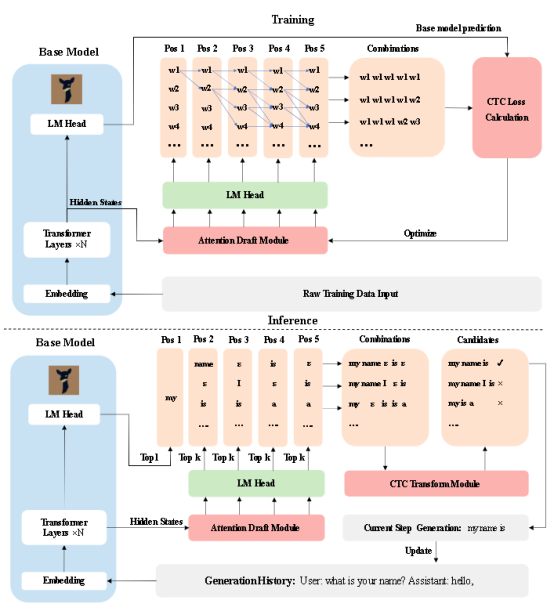

Inference acceleration of large language models (LLMs) has been put forward in many application scenarios and speculative decoding has shown its advantage in addressing inference acceleration. Speculative decoding usually introduces a draft model to assist the base LLM where the draft model produces drafts and the base LLM verifies the draft for acceptance or rejection. In this framework, the final inference speed is decided by the decoding speed of the draft model and the acceptance rate of the draft provided by the draft model. Currently the widely used draft models usually generate draft tokens for the next several positions in a non-autoregressive way without considering the correlations between draft tokens. Therefore, it has a high decoding speed but an unsatisfactory acceptance rate. In this paper, we focus on how to improve the performance of the draft model and aim to accelerate inference via a high acceptance rate. To this end, we propose a CTC-based draft model which strengthens the correlations between draft tokens during the draft phase, thereby generating higher-quality draft candidate sequences. Experiment results show that compared to strong baselines, the proposed method can achieve a higher acceptance rate and hence a faster inference speed.