Congratulations to the NLP group on having six papers accepted at EMNLP 2023, including two papers in the main conference and four papers in Findings of EMNLP! The full name of EMNLP 2023 is the 2023 Conference on Empirical Methods in Natural Language Processing. EMNLP is organized by SIGDAT, the ACL special interest group on linguistic data. It is held once a year and is one of the most influential international conferences in the field of natural language processing. EMNLP 2023 will be held in Singapore from December 6 to December 10, 2023.

The accepted papers are summarized as follows:

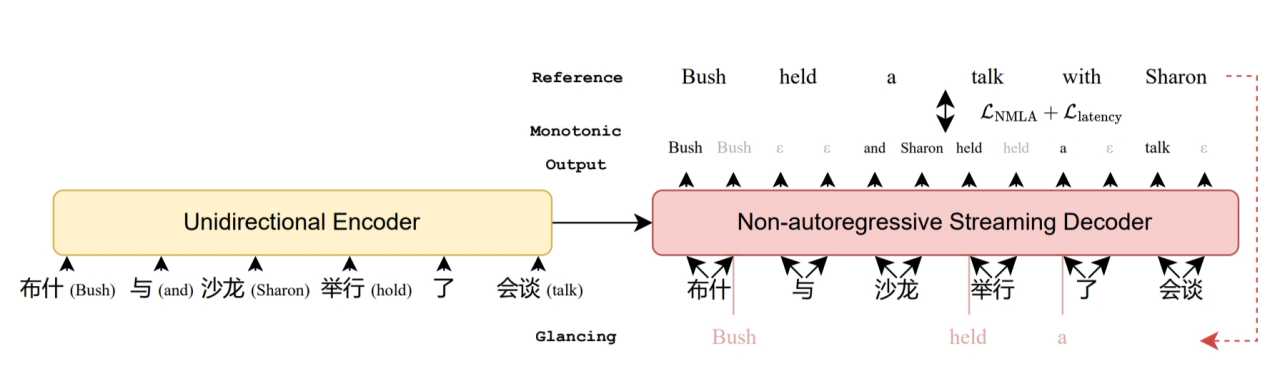

- Non-autoregressive Streaming Transformer for Simultaneous Translation (Zhengrui Ma, Shaolei Zhang, Shoutao Guo, Chenze Shao, Min Zhang, Yang Feng).

- Accepted by the Main Conference.

Abstract: Simultaneous machine translation (SiMT) models are trained to strike a balance between latency and translation quality. However, training these models to achieve high quality while maintaining low latency often leads to a tendency for aggressive anticipation. We argue that this issue stems from the autoregressive architecture upon which most existing SiMT models are built. To address it, we propose the non-autoregressive streaming Transformer (NAST), which comprises a unidirectional encoder and a non-autoregressive decoder with intra-chunk parallelism. We enable NAST to generate blank or repeated tokens to adjust its READ/WRITE strategy flexibly, and train it to maximize the non-monotonic latent alignment with an alignment-based latency loss. Experiments on various SiMT benchmarks demonstrate that NAST outperforms previous strong autoregressive SiMT baselines.
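As a rough illustration of how blank and repeated tokens can give a chunk-wise decoder room to delay or skip output, here is a minimal sketch. It assumes CTC-style collapsing (merge consecutive repeats, then drop blanks), which the abstract does not spell out; the `<blank>` symbol and the function names are hypothetical.

```python
BLANK = "<blank>"  # hypothetical blank symbol, not taken from the paper


def collapse(tokens, blank=BLANK):
    """Merge consecutive repeated tokens, then drop blanks (CTC-style)."""
    out = []
    prev = None
    for tok in tokens:
        # Keep a token only if it differs from its predecessor and is not blank.
        if tok != prev and tok != blank:
            out.append(tok)
        prev = tok
    return out


def collapse_chunks(chunks, blank=BLANK):
    """Flatten the per-chunk decoder outputs and collapse them into one sentence."""
    flat = [tok for chunk in chunks for tok in chunk]
    return collapse(flat, blank)


# A chunk made only of blanks contributes nothing to the output, so under this
# assumption it behaves like waiting for more source input before writing.
chunks = [[BLANK, BLANK], ["we", "we"], [BLANK, "agree"]]
print(collapse_chunks(chunks))
```

Under this assumed scheme, a blank between two identical tokens keeps both copies, so genuine repetitions in the target can still be expressed.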

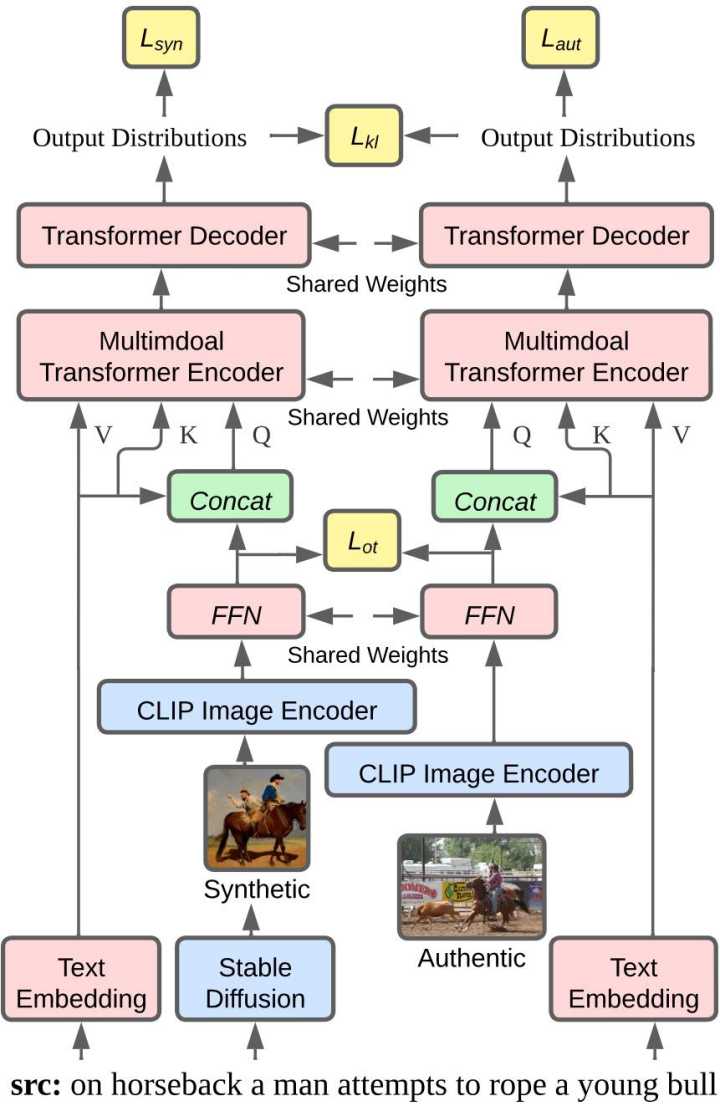

- Bridging the Gap between Synthetic and Authentic Images for Multimodal Machine Translation (Wenyu Guo, Qingkai Fang, Dong Yu, Yang Feng).

- Accepted by the Main Conference.

Abstract: Multimodal machine translation (MMT) simultaneously takes the source sentence and a relevant image as input for translation. Since there is no paired image available for the input sentence in most cases, recent studies suggest utilizing powerful text-to-image generation models to provide image inputs. Nevertheless, synthetic images generated by these models often follow different distributions compared to authentic images. Consequently, using authentic images for training and synthetic images for inference can introduce a distribution shift, resulting in performance degradation during inference. To tackle this challenge, in this paper, we feed synthetic and authentic images to the MMT model, respectively. Then we minimize the gap between the synthetic and authentic images by drawing close the input image representations of the Transformer Encoder and the output distributions of the Transformer Decoder. Therefore, we mitigate the distribution disparity introduced by the synthetic images during inference, thereby freeing the authentic images from the inference process. Experimental results show that our approach achieves state-of-the-art performance on the Multi30K En-De and En-Fr datasets, while remaining independent of authentic images during inference.
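The idea of pulling together both the encoder-side image representations and the decoder-side output distributions can be sketched as a two-term consistency loss. This is only an illustration: the abstract does not name the distance measures, so cosine distance and KL divergence are stand-ins chosen here, and every function name and the weights `alpha` and `beta` are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def cosine_distance(u, v):
    """1 - cosine similarity; assumes both vectors are non-zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def consistency_loss(syn_repr, auth_repr, syn_logits, auth_logits,
                     alpha=1.0, beta=1.0):
    """Weighted sum of a representation gap and an output-distribution gap.

    Assumption: cosine distance and KL are illustrative choices, not
    necessarily the measures used in the paper.
    """
    repr_gap = cosine_distance(syn_repr, auth_repr)
    out_gap = kl_divergence(softmax(auth_logits), softmax(syn_logits))
    return alpha * repr_gap + beta * out_gap


print(consistency_loss([1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [0.0, 2.0]))
```

In a training setup of this kind, such a term would be added to the usual translation loss so that the model behaves similarly whether it sees the synthetic or the authentic image.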

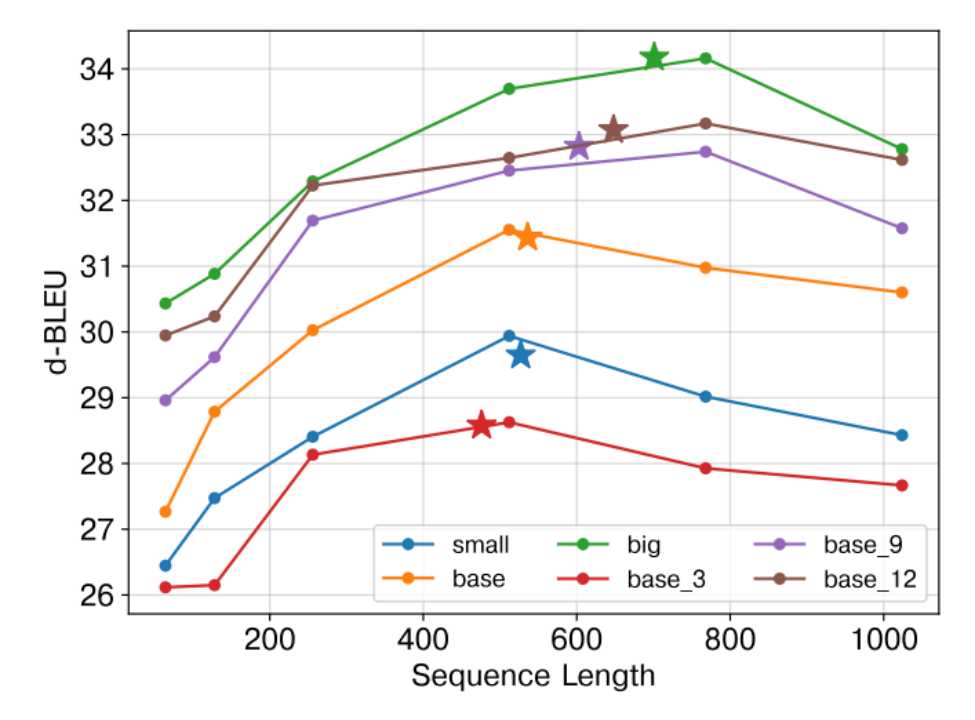

- Scaling Law for Document-Level Neural Machine Translation (Zhuocheng Zhang, Shuhao Gu, Min Zhang, Yang Feng).

- Accepted by Findings of EMNLP.

Abstract: The scaling laws of language models have played a significant role in advancing large language models. In order to promote the development of document translation, we systematically examine the scaling laws in this field. In this paper, we carry out an in-depth analysis of the influence of three factors on translation quality: model scale, data scale, and sequence length. Our findings reveal that increasing sequence length effectively enhances model performance when model size is limited. However, sequence length cannot be infinitely extended; it must be suitably aligned with the model scale and corpus volume. Further research shows that providing adequate context can effectively enhance the translation quality of a document's initial portion. Nonetheless, exposure bias remains the primary factor hindering further improvement in translation quality for the latter half of the document.

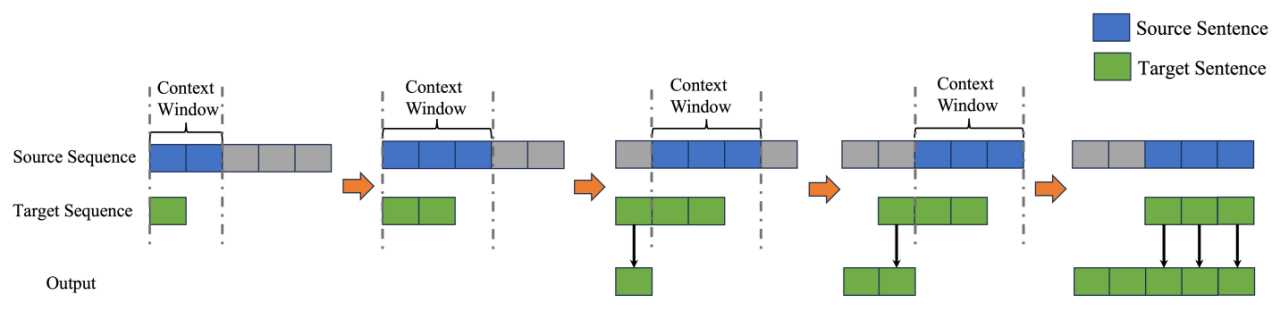

- Addressing the Length Bias Challenge in Document-Level Neural Machine Translation (Zhuocheng Zhang, Shuhao Gu, Min Zhang, Yang Feng).

- Accepted by Findings of EMNLP.

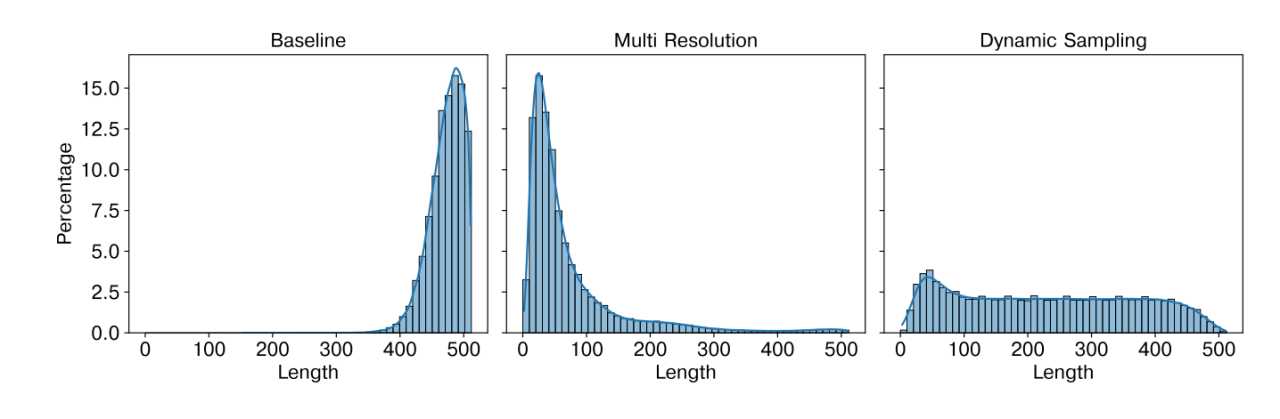

Abstract: Document-level neural machine translation (DNMT) has shown promising results by incorporating context information through increased maximum lengths of source and target sentences. However, this approach also introduces a length bias problem, whereby DNMT suffers from significant translation quality degradation when decoding sentences that are much shorter or longer than the maximum sentence length during training. To prevent the model from neglecting shorter sentences, we sample the training data to ensure a more uniform distribution across different sentence lengths while progressively increasing the maximum sentence length during training. Additionally, we introduce a length-normalized attention mechanism to aid the model in focusing on target information, mitigating the issue of attention divergence when processing longer sentences. Furthermore, during the decoding stage of DNMT, we propose a sliding decoding strategy that limits the length of target sentences so that it does not exceed the maximum length encountered during training. The experimental results indicate that our method can achieve state-of-the-art results on several open datasets, and further analysis shows that our method can significantly alleviate the length bias problem.
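One way to picture a sliding decoding strategy is a window of recent sentence pairs that is trimmed whenever the target side grows past a token budget. The sketch below is an assumption-laden illustration, not the paper's procedure: `translate_sent` is a hypothetical callback standing in for the DNMT model, and `max_len` stands in for the maximum length seen in training.

```python
def sliding_decode(src_sents, translate_sent, max_len):
    """Translate a document sentence by sentence with a bounded context window.

    src_sents:      list of source sentences, each a list of tokens.
    translate_sent: hypothetical callback (context_pairs, src) -> target tokens.
    max_len:        token budget for the target-side context kept in the window.
    """
    window = []   # (source, target) pairs currently used as context
    outputs = []
    for src in src_sents:
        tgt = translate_sent(list(window), src)
        outputs.append(tgt)
        window.append((src, tgt))
        # Slide the window: drop the oldest pairs until the kept target-side
        # history fits within the budget again.
        while window and sum(len(t) for _, t in window) > max_len:
            window.pop(0)
    return outputs


# Toy usage with a stand-in "model" that just uppercases the source tokens.
doc = [["a", "b"], ["c", "d"], ["e", "f"]]
print(sliding_decode(doc, lambda ctx, src: [w.upper() for w in src], max_len=4))
```

The trimming rule here is deliberately simple; a real system could also reserve room for the sentence currently being decoded.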

- Enhancing Neural Machine Translation with Semantic Units (Langlin Huang, Shuhao Gu, Zhuocheng Zhang, Yang Feng).

- Accepted by Findings of EMNLP.

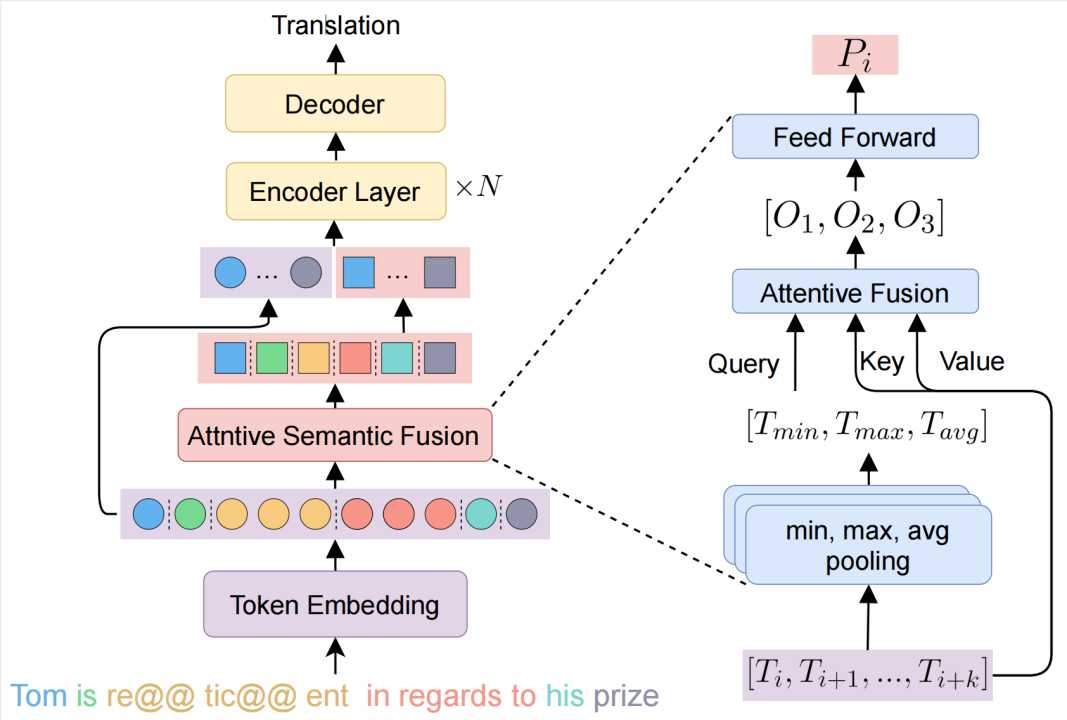

Abstract: Conventional neural machine translation (NMT) models typically use subwords and words as the basic units for model input and comprehension. However, complete words and phrases composed of several tokens are often the fundamental units for expressing semantics, referred to as semantic units. To address this issue, we propose to recover the integral meanings of semantic units within a sentence, which are then leveraged to provide a new perspective for understanding the sentence. Specifically, we first propose Word Pair Encoding (WPE), a phrase extraction method to help identify the boundaries of semantic units. Next, we design an Attentive Semantic Fusion (ASF) layer to integrate the semantics of multiple subwords into a single vector: the semantic unit representation. Lastly, the semantic-unit-level sentence representation is concatenated to the token-level one, and they are combined together as the input to the encoder. Experimental results demonstrate that our method effectively models and leverages semantic-unit-level information and outperforms the strong baselines.

- Simultaneous Machine Translation with Tailored Reference (Shoutao Guo, Shaolei Zhang, Yang Feng).

- Accepted by Findings of EMNLP.

Abstract: Simultaneous machine translation (SiMT) generates the translation while still reading the source sentence. However, existing SiMT models are typically trained using the same reference, disregarding the varying amounts of available source information at different latencies. Training the model with the ground truth at low latency introduces forced anticipations, whereas utilizing a reference consistent with the source word order at high latency results in performance degradation. Consequently, it is crucial to train the SiMT model with a reference that avoids forced anticipations during training while maintaining high quality. In this paper, we propose a novel method that provides a tailored reference for SiMT models trained at different latencies by rephrasing the ground truth. Specifically, we introduce the tailor, induced by reinforcement learning, to modify the ground truth into the tailored reference. The SiMT model is trained with the tailored reference and jointly optimized with the tailor to enhance performance. Importantly, our method is applicable to a wide range of current SiMT approaches. Experiments on three tasks demonstrate that our method achieves state-of-the-art performance in fixed and adaptive policies.