Congratulations to the NLP group on having four papers accepted at NeurIPS 2023! NeurIPS 2023, the Thirty-seventh Conference on Neural Information Processing Systems, is one of the top conferences in the field of artificial intelligence. NeurIPS is currently ranked 10th in Google Scholar's ranking of academic conferences and journals. NeurIPS 2023 will be held in New Orleans, LA, from Dec 10 to Dec 16, 2023.

The accepted papers are summarized as follows:

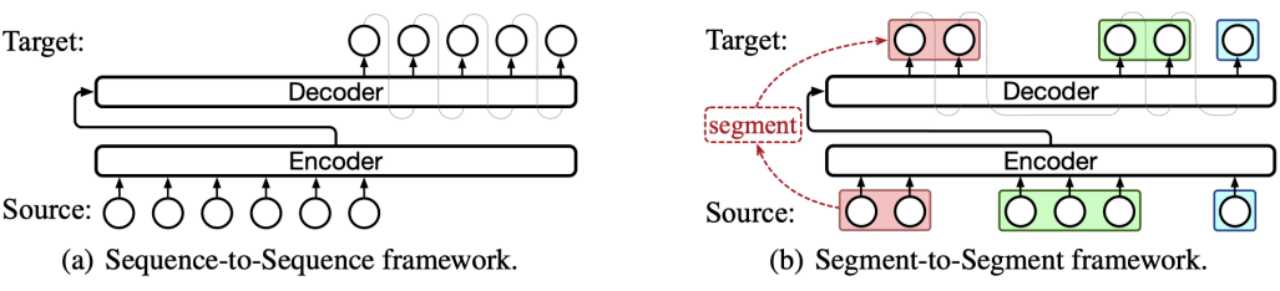

- Unified Segment-to-Segment Framework for Simultaneous Sequence Generation (Shaolei Zhang, Yang Feng).

- Accepted by NeurIPS 2023. Long Paper.

Abstract: Simultaneous sequence generation is a pivotal task for real-time scenarios, where the target sequence is generated while receiving the source sequence. The crux of achieving high-quality generation with low latency lies in identifying the optimal moments for generating, accomplished by learning a mapping between the source and target sequences. However, existing methods often rely on task-specific heuristics for different sequence types, limiting the model’s capacity to adaptively learn the source-target mapping and hindering the exploration of multi-task learning for various simultaneous tasks. In this paper, we propose a unified segment-to-segment framework (Seg2Seg) for simultaneous sequence generation, which learns the mapping in an adaptive and unified manner. During the process of simultaneous generation, the model alternates between waiting for a source segment and generating a target segment, making the segment serve as the natural bridge between the source and target. To accomplish this, Seg2Seg introduces a latent segment as the pivot between source and target and explores all potential source-target mappings via the proposed expectation training, thereby learning the optimal moments for generating. Experiments on multiple simultaneous generation tasks, including streaming speech recognition, simultaneous machine translation and simultaneous speech translation, demonstrate that Seg2Seg achieves state-of-the-art performance and exhibits better generality across various simultaneous generation tasks.
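
The alternation between waiting for a source segment and generating a target segment can be pictured with a small control loop. The sketch below is only an illustration under stated assumptions, not the Seg2Seg model: `simultaneous_generate`, `segment_complete` and `generate_segment` are hypothetical names, and the toy policy (close a segment every two tokens, "translate" by uppercasing) stands in for decisions that the real model learns.

```python
from typing import Callable, Iterable, Iterator, List


def simultaneous_generate(
    source_stream: Iterable[str],
    segment_complete: Callable[[List[str]], bool],
    generate_segment: Callable[[List[str], List[str]], List[str]],
) -> Iterator[List[str]]:
    """Alternate between reading a source segment and emitting a target segment."""
    received: List[str] = []  # every source token seen so far
    pending: List[str] = []   # tokens of the source segment currently being read
    emitted: List[str] = []   # every target token produced so far
    for token in source_stream:
        received.append(token)
        pending.append(token)
        # Hypothetical decision: is the current source segment finished?
        if segment_complete(pending):
            target_segment = generate_segment(received, emitted)
            emitted.extend(target_segment)
            pending = []
            yield target_segment
    # Flush whatever remains once the source stream has ended.
    if pending:
        yield generate_segment(received, emitted)


# Toy stand-ins (assumptions): fixed-size segments and an uppercase "translation".
def every_two_tokens(pending: List[str]) -> bool:
    return len(pending) == 2


def uppercase_new_tokens(received: List[str], emitted: List[str]) -> List[str]:
    return [tok.upper() for tok in received[len(emitted):]]


segments = list(
    simultaneous_generate("a b c d e".split(), every_two_tokens, uppercase_new_tokens)
)
```

In this toy run each target segment is produced as soon as its source segment closes, so output starts before the full source has arrived. Replacing `every_two_tokens` with a learned decision is where an adaptive source-target mapping would enter.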

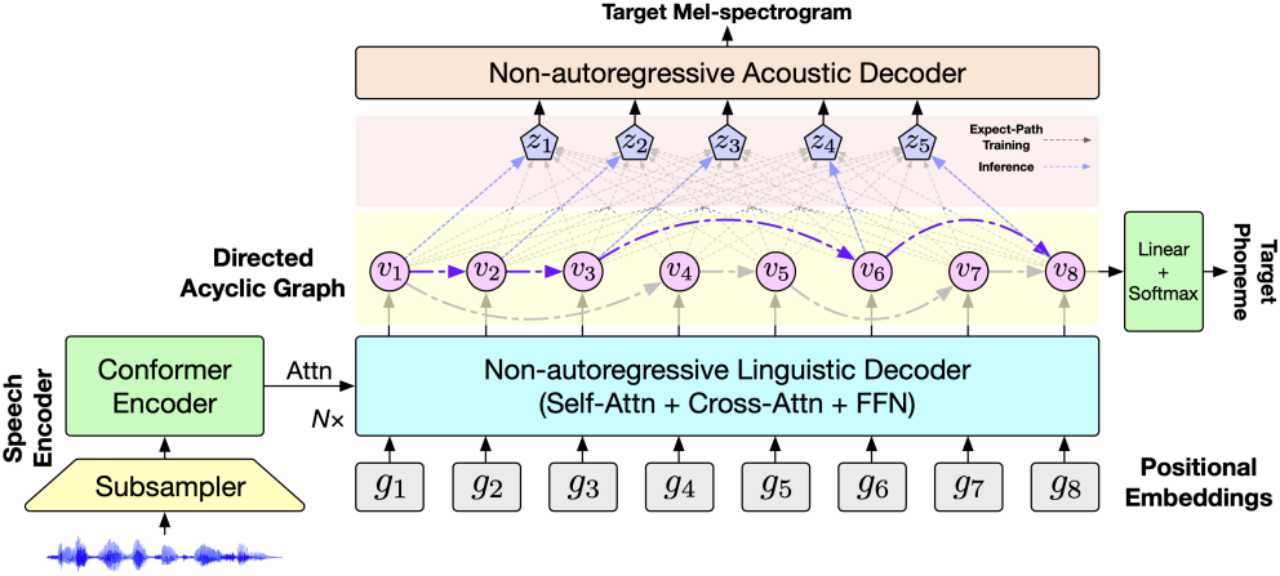

- DASpeech: Directed Acyclic Transformer for Fast and High-quality Speech-to-Speech Translation (Qingkai Fang, Yan Zhou, Yang Feng).

- Accepted by NeurIPS 2023. Long Paper.

Abstract: Direct speech-to-speech translation (S2ST) directly translates speech of the source language into the target language, which can break the communication barriers between different language groups and has broad application prospects. However, due to the presence of linguistic and acoustic diversity, the target speech follows a complex multimodal distribution, posing challenges to achieving both high-quality translations and fast decoding speeds for S2ST models. In this paper, we propose DASpeech, a non-autoregressive direct S2ST model that achieves both fast and high-quality S2ST. DASpeech adopts the two-pass architecture to decompose the generation process into two steps, where a DA-Transformer model first generates the target text, and a FastSpeech 2 model then generates the target speech based on the hidden states of the DA-Transformer. To consider all potential paths in the directed acyclic graph (DAG) of the DA-Transformer during training, we calculate the expected hidden states for each target token via dynamic programming. Experiments on the CVSS benchmark demonstrate that DASpeech can achieve comparable or even better performance than the state-of-the-art S2ST model Translatotron 2, while preserving up to 18.53x speedup compared to the autoregressive baseline model. Compared with the previous non-autoregressive S2ST model, DASpeech does not rely on knowledge distillation or iterative decoding, achieving significant improvements in both translation quality and decoding speed. Furthermore, DASpeech shows the ability to preserve the speaker’s voice of the source speech during translation.
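
To make the idea of an expected hidden state over all DAG paths concrete, here is a generic forward-backward sketch. It is not the DASpeech implementation: the function names, the path weighting (transition probability times per-vertex token probability), and the toy tables `hidden`, `trans` and `emit` are assumptions chosen for illustration. Paths are assumed to start at the first vertex, end at the last, and move strictly forward.

```python
from itertools import combinations


def expected_hidden_states(hidden, trans, emit):
    """hidden[j]: vector at vertex j; trans[j][k]: P(j -> k), used only for k > j;
    emit[j][t]: probability that vertex j produces target token t."""
    V, M, dim = len(hidden), len(emit[0]), len(hidden[0])
    # Forward: alpha[t][j] = weight of all partial paths that place token t at vertex j.
    alpha = [[0.0] * V for _ in range(M)]
    alpha[0][0] = emit[0][0]
    for t in range(1, M):
        for j in range(V):
            alpha[t][j] = emit[j][t] * sum(alpha[t - 1][k] * trans[k][j] for k in range(j))
    # Backward: beta[t][j] = weight of all completions that finish at the last vertex.
    beta = [[0.0] * V for _ in range(M)]
    beta[M - 1][V - 1] = 1.0
    for t in range(M - 2, -1, -1):
        for j in range(V):
            beta[t][j] = sum(
                trans[j][k] * emit[k][t + 1] * beta[t + 1][k] for k in range(j + 1, V)
            )
    z = alpha[M - 1][V - 1]  # total weight of all valid paths
    expected = []
    for t in range(M):
        posterior = [alpha[t][j] * beta[t][j] / z for j in range(V)]
        expected.append(
            [sum(posterior[j] * hidden[j][d] for j in range(V)) for d in range(dim)]
        )
    return expected


def brute_force_expectation(hidden, trans, emit):
    """Same quantity by enumerating every path; only feasible for tiny graphs."""
    V, M, dim = len(hidden), len(emit[0]), len(hidden[0])
    totals, z = [[0.0] * dim for _ in range(M)], 0.0
    for middle in combinations(range(1, V - 1), M - 2):
        path = (0,) + middle + (V - 1,)
        weight = 1.0
        for t, j in enumerate(path):
            weight *= emit[j][t] * (trans[path[t - 1]][j] if t > 0 else 1.0)
        z += weight
        for t, j in enumerate(path):
            for d in range(dim):
                totals[t][d] += weight * hidden[j][d]
    return [[value / z for value in row] for row in totals]


# Toy DAG with 4 vertices and 3 target tokens (all numbers are made up).
hidden = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [3.0, 1.0]]
trans = [[0.0, 0.5, 0.3, 0.2], [0.0, 0.0, 0.6, 0.4], [0.0, 0.0, 0.0, 1.0], [0.0] * 4]
emit = [[0.9, 0.1, 0.1], [0.2, 0.7, 0.1], [0.1, 0.5, 0.2], [0.1, 0.1, 0.8]]

dp_result = expected_hidden_states(hidden, trans, emit)
bf_result = brute_force_expectation(hidden, trans, emit)
```

The dynamic program touches each (token, vertex) pair a bounded number of times, whereas the brute-force check grows combinatorially with the number of vertices. On this toy graph the two agree up to floating-point error.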

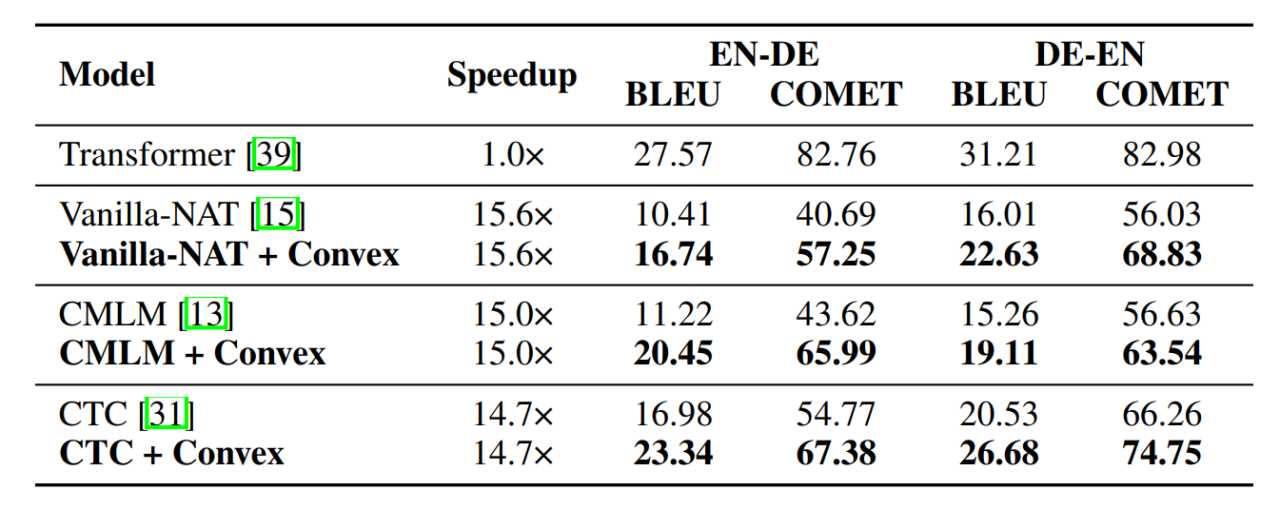

- Beyond MLE: Convex Loss for Text Generation (Chenze Shao*, Zhengrui Ma*, Min Zhang, Yang Feng).

- Accepted by NeurIPS 2023. Long Paper.

Abstract: Maximum likelihood estimation (MLE) is a statistical method used to estimate the parameters of a probability distribution that best explain the observed data. In the context of text generation, MLE is often used to train generative language models, which can then be used to generate new text. However, we argue that MLE is not always necessary and optimal, especially for closed-ended text generation tasks like machine translation. In these tasks, the goal of the model is to generate the most appropriate response, which does not necessarily require it to estimate the entire data distribution with MLE. To this end, we propose a novel class of training objectives based on convex functions, which enables text generation models to focus on highly probable outputs without having to estimate the entire data distribution. We investigate the theoretical properties of the optimal predicted distribution when applying convex functions to the loss, demonstrating that convex functions can sharpen the optimal distribution, thereby enabling the model to better capture outputs with high probabilities. Experiments on various text generation tasks and models show the effectiveness of our approach. Specifically, it enables autoregressive models to bridge the gap between greedy and beam search, and facilitates the learning of non-autoregressive models with a maximum improvement of 9+ BLEU points.
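
The sharpening effect can be seen in a toy calculation. The sketch below assumes the training objective maximizes the expectation of f(q(x)) under the data distribution, where q is the predicted distribution. That form, the three-outcome data distribution, the candidate predictions, and the choice f(q) = q² are illustrative assumptions rather than the paper's exact setup.

```python
import math

EPS = 1e-12  # avoids log(0) for candidates that put zero mass on an outcome


def expected_objective(p_data, q, f):
    """Return sum_x p_data(x) * f(q(x)), the quantity a loss of -E[f(q)] maximizes."""
    return sum(p * f(qx) for p, qx in zip(p_data, q))


def log_f(q):
    """Concave choice: corresponds to maximum likelihood estimation."""
    return math.log(max(q, EPS))


def square_f(q):
    """Convex, increasing choice on [0, 1] (an illustrative assumption)."""
    return q * q


# Toy data distribution over three possible outputs (made-up numbers).
p_data = [0.5, 0.3, 0.2]

# Candidate predicted distributions to compare.
candidates = {
    "match_data": [0.5, 0.3, 0.2],
    "sharpened": [0.8, 0.15, 0.05],
    "one_hot_mode": [1.0, 0.0, 0.0],
}


def best_candidate(f):
    """Name of the candidate that maximizes the expected objective under f."""
    return max(candidates, key=lambda name: expected_objective(p_data, candidates[name], f))


best_under_log = best_candidate(log_f)
best_under_square = best_candidate(square_f)
```

Under the log objective the candidate that matches the data distribution wins. Under the convex objective the preferred candidate puts all of its mass on the most probable output, which is the sense in which a convex function sharpens the optimum in this toy setting.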

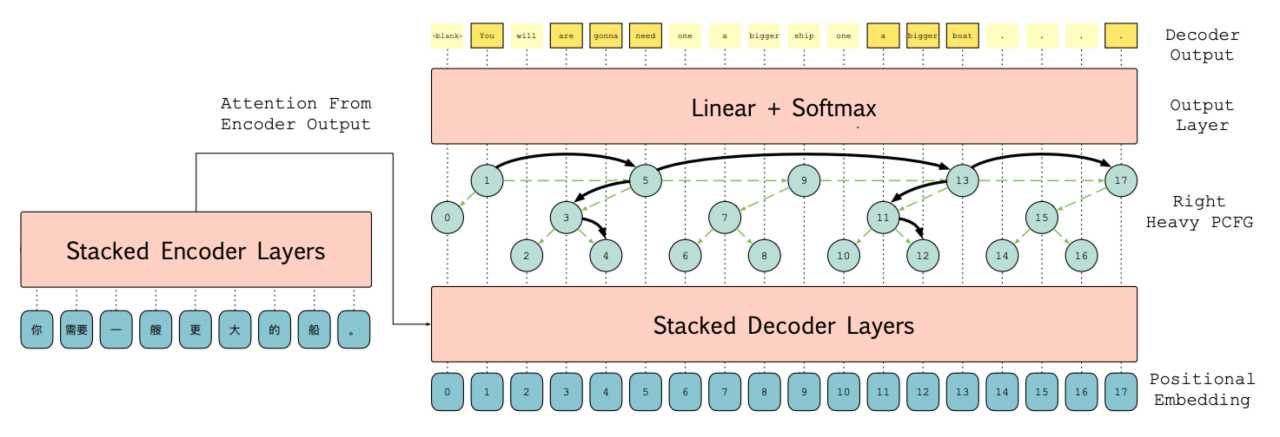

- Non-autoregressive Machine Translation with Probabilistic Context-free Grammar (Shangtong Gui, Chenze Shao, Zhengrui Ma, Xishan Zhang, Yunji Chen, Yang Feng).

- Accepted by NeurIPS 2023. Long Paper.

Abstract: Non-autoregressive Transformer (NAT) significantly accelerates the inference of neural machine translation. However, conventional NAT models suffer from limited expressive power and performance degradation compared to autoregressive (AT) models due to the assumption of conditional independence among target tokens. To address these limitations, we propose a novel approach called PCFG-NAT, which leverages a specially designed Probabilistic Context-Free Grammar (PCFG) to enhance the ability of NAT models to capture complex dependencies among output tokens. Experimental results on major machine translation benchmarks demonstrate that PCFG-NAT further narrows the gap in translation quality between NAT and AT models. Moreover, PCFG-NAT facilitates a deeper understanding of the generated sentences, addressing the lack of satisfactory explainability in neural machine translation.
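
The cost of the conditional-independence assumption shows up in a two-sentence toy example. The sketch below does not use PCFG-NAT or any real NAT model: it fits independent per-position token distributions to two hypothetical reference translations and shows that the factorized model assigns probability to a mixed output that never occurs in the data.

```python
from collections import Counter

# Two equally likely reference translations of the same source (hypothetical data).
references = [("thank", "you"), ("many", "thanks")]


def position_marginals(samples):
    """Estimate an independent token distribution for each target position."""
    length = len(samples[0])
    marginals = []
    for pos in range(length):
        counts = Counter(sentence[pos] for sentence in samples)
        total = sum(counts.values())
        marginals.append({token: count / total for token, count in counts.items()})
    return marginals


def factorized_prob(marginals, sentence):
    """Probability under the conditional-independence assumption (product of marginals)."""
    prob = 1.0
    for pos, token in enumerate(sentence):
        prob *= marginals[pos].get(token, 0.0)
    return prob


def empirical_prob(samples, sentence):
    """Probability of the full sentence in the reference data."""
    return samples.count(sentence) / len(samples)


marginals = position_marginals(references)
mixed = ("thank", "thanks")  # blends the two references; never observed

mixed_factorized = factorized_prob(marginals, mixed)
mixed_empirical = empirical_prob(references, mixed)
valid_factorized = factorized_prob(marginals, ("thank", "you"))
```

Because each position is modeled on its own, the invalid blend receives the same probability as each valid reference. Modeling dependencies among output tokens is what removes this kind of leakage.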