Congratulations! In October 2022, eight papers from the NLP group were accepted by EMNLP 2022, including 4 main conference papers and 4 Findings papers! EMNLP 2022, the Conference on Empirical Methods in Natural Language Processing, is organized by SIGDAT, a special interest group of the Association for Computational Linguistics (ACL). It is held annually and is one of the most influential international conferences in the field of natural language processing.

The accepted papers are summarized as follows:

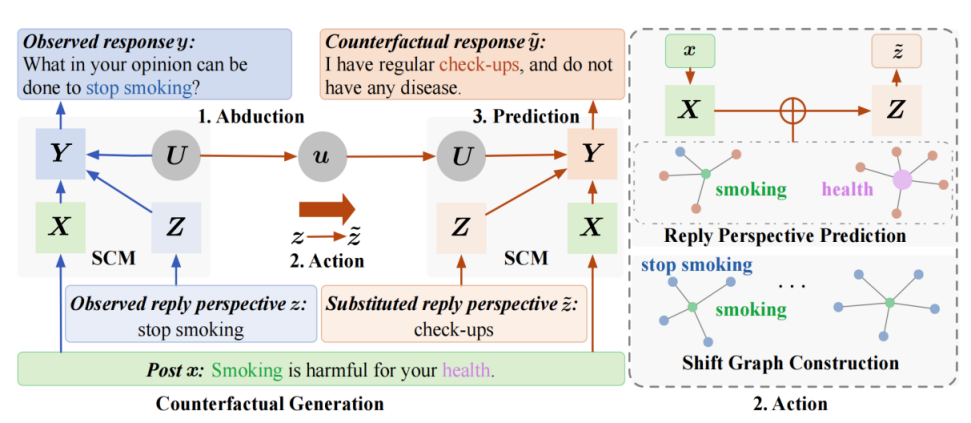

1. Counterfactual Data Augmentation via Perspective Transition for Open-Domain Dialogues (Jiao Ou, Jinchao Zhang, Yang Feng, Jie Zhou).

Accepted by Main Conference.

Abstract: The construction of open-domain dialogue systems requires high-quality dialogue datasets. The dialogue data admits a wide variety of responses for a given dialogue history, especially responses with different semantics. However, collecting such a high-quality dataset is labor-intensive and time-consuming in most scenarios. In this paper, we propose a data augmentation method that uses counterfactual inference to automatically augment high-quality responses with different semantics. Specifically, given an observed dialogue, our counterfactual generation model first infers semantically different responses by replacing the observed reply perspective with substituted ones. Furthermore, our data selection method filters out detrimental augmented responses. Experimental results show that our data augmentation method can augment high-quality responses with different semantics for a given dialogue history, and can outperform competitive baselines on multiple downstream tasks.

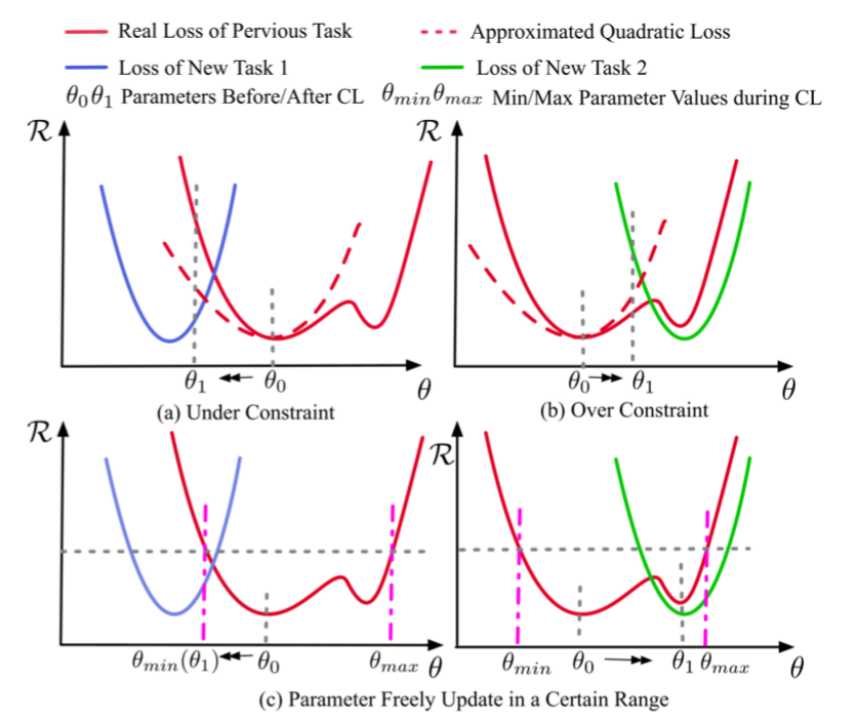

2. Continual Learning of Neural Machine Translation within Low Forgetting Risk Regions (Shuhao Gu, Bojie Hu, Yang Feng).

Accepted by Main Conference.

Abstract: This paper considers continual learning of a large-scale pretrained neural machine translation model without accessing the previous training data or introducing model separation. We argue that the widely used regularization-based methods, which perform multi-objective learning with an auxiliary loss, suffer from the misestimate problem and cannot always achieve a good balance between the previous and new tasks. To solve the problem, we propose a two-stage training method based on the local features of the real loss. We first search for low forgetting risk regions, where the model can retain its performance on the previous task as the parameters are updated, to avoid the catastrophic forgetting problem. Then we continually train the model within this region using only the new training data to fit the new task. Specifically, we propose two methods to search for the low forgetting risk regions, based on the curvature of the loss and on the impact of the parameters on the model output, respectively. We conduct experiments on domain adaptation and the more challenging language adaptation tasks, and the experimental results show that our method achieves significant improvements over several strong baselines.

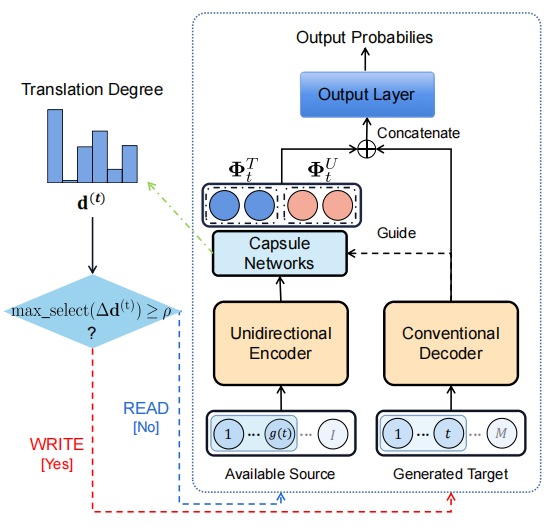

3. Information-Transport-based Policy for Simultaneous Translation (Shaolei Zhang, Yang Feng).

Accepted by Main Conference.

Abstract: Simultaneous translation (ST) outputs translation while receiving the source inputs, and hence requires a policy to determine whether to translate a target token or wait for the next source token. The major challenge of ST is that each target token can only be translated based on the currently received source tokens, so the received source information directly affects the translation quality. Naturally, then, how much source information has been received for translating the current target token should be the pivotal evidence for the ST policy to decide between translating and waiting. In this paper, we treat translation as information transport from source to target and accordingly propose Information-Transport-based Simultaneous Translation (ITST). ITST quantifies the transported information weight from each source token to the current target token, and then decides whether to translate the target token according to its accumulated received information. Experiments on both text-to-text ST and speech-to-text ST (a.k.a. streaming speech translation) tasks show that ITST outperforms strong baselines and achieves state-of-the-art performance.
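To make the decision rule more concrete, here is a minimal Python sketch of an ITST-style READ/WRITE loop. It assumes the transported information weights are already available as a matrix `transport[i][j]` (from source token `j` to target token `i`) and uses a hypothetical `threshold` parameter. In ITST itself the weights are predicted by the model from the tokens received so far, so this only illustrates the shape of the policy, not the authors' implementation.

```python
from typing import List


def itst_policy(transport: List[List[float]], threshold: float) -> List[str]:
    """Return a READ/WRITE action sequence (illustrative sketch only).

    Assumption: transport[i][j] is a precomputed weight of information
    transported from source token j to target token i. In the real model
    these weights would be computed on the fly from the received prefix.
    """
    num_src = len(transport[0])
    actions: List[str] = []
    received = 0  # number of source tokens read so far
    for row in transport:
        # Keep reading while the information accumulated for this target token
        # is below the threshold and unread source tokens remain.
        while received < num_src and sum(row[:received]) < threshold:
            actions.append("READ")
            received += 1
        actions.append("WRITE")
    return actions


if __name__ == "__main__":
    weights = [[0.7, 0.2, 0.1], [0.1, 0.6, 0.3], [0.0, 0.2, 0.8]]
    print(itst_policy(weights, threshold=0.5))
```

A single threshold on accumulated information replaces a fixed token count, which is what lets the policy read more source tokens for target tokens that depend on later parts of the input.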

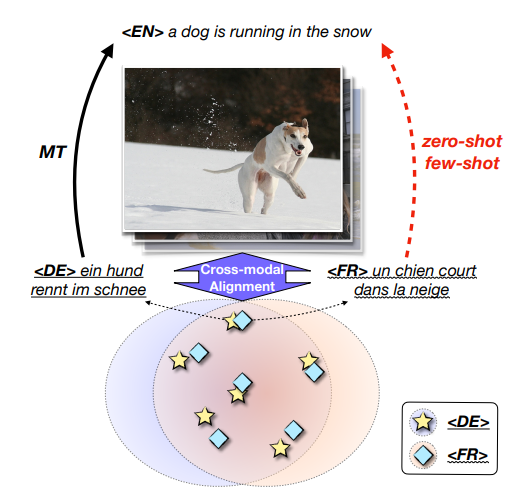

4. Low-resource Neural Machine Translation with Cross-modal Alignment (Zhe Yang, Qingkai Fang, Yang Feng).

Accepted by Main Conference.

Abstract: For low-resource neural machine translation, existing techniques often rely on large-scale monolingual corpora, which is impractical for some low-resource languages. In this paper, we instead connect several low-resource languages to a particular high-resource one through an additional visual modality. Specifically, we propose a cross-modal contrastive learning method to learn a shared space for all languages, where both a coarse-grained sentence-level objective and a fine-grained token-level one are introduced. Experimental results and further analysis show that our method can effectively learn the cross-modal and cross-lingual alignment with a small amount of image-text pairs and achieves significant improvements over the text-only baseline under both zero-shot and few-shot scenarios.
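A sentence-level contrastive objective of this kind is commonly written as an InfoNCE loss. The sketch below is a generic, one-directional (text to image) InfoNCE in plain Python, assuming cosine similarity and a temperature `tau`; it is not claimed to be the paper's exact coarse-grained objective, and the embeddings are toy inputs rather than encoder outputs.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(text_emb: List[Vector], image_emb: List[Vector], tau: float = 0.1) -> float:
    """Generic InfoNCE loss (assumed form): text i should match image i.

    All other images in the batch act as negatives for text i.
    """
    n = len(text_emb)
    total = 0.0
    for i in range(n):
        logits = [cosine(text_emb[i], image_emb[j]) / tau for j in range(n)]
        # Numerically stable log-sum-exp over the batch.
        m = max(logits)
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_z - logits[i]  # negative log-softmax of the positive pair
    return total / n
```

Because each sentence is pulled toward its paired image and pushed away from the others, sentences in different languages that describe the same image end up close to each other in the shared space.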

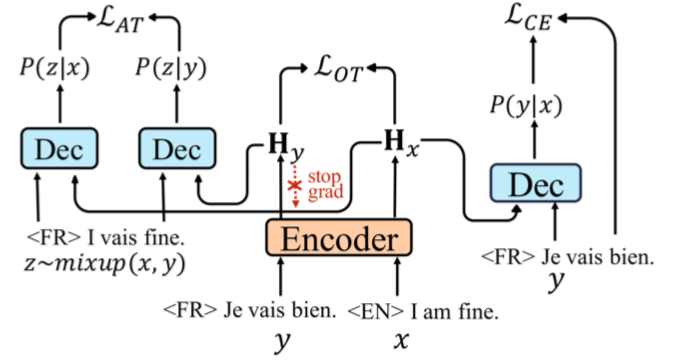

5. Improving Zero-Shot Multilingual Translation with Universal Representations and Cross-Mappings (Shuhao Gu, Yang Feng).

Accepted by Findings of EMNLP.

Abstract: Many-to-many multilingual neural machine translation can translate between language pairs unseen during training, i.e., zero-shot translation. Improving zero-shot translation requires the model to learn universal representations and cross-mapping relationships to transfer the knowledge learned on the supervised directions to the zero-shot directions. In this work, we propose the state mover’s distance, based on optimal transport theory, to model the difference between the representations output by the encoder. Then, we bridge the gap between the semantically equivalent representations of different languages at the token level by minimizing the proposed distance to learn universal representations. In addition, we propose an agreement-based training scheme, which helps the model make consistent predictions based on semantically equivalent sentences to learn universal cross-mapping relationships for all translation directions. The experimental results on diverse multilingual datasets show that our method consistently improves over the baseline system and other comparison methods. Further analysis shows that our method better aligns the semantic space and improves prediction consistency.
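As a rough illustration of an optimal-transport distance between two sets of token representations, the following sketch computes an entropy-regularized OT cost with Sinkhorn iterations in plain Python. Uniform token weights, squared Euclidean cost, and the `eps`/`iters` values are assumptions for this example; the paper's state mover's distance may be defined or computed differently.

```python
import math
from typing import List

Vector = List[float]


def squared_euclidean(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def sinkhorn_distance(xs: List[Vector], ys: List[Vector],
                      eps: float = 0.1, iters: int = 200) -> float:
    """Entropy-regularized OT cost between two sets of token states.

    Assumptions: each set carries uniform mass and the ground cost is the
    squared Euclidean distance between states.
    """
    n, m = len(xs), len(ys)
    cost = [[squared_euclidean(x, y) for y in ys] for x in xs]
    # Gibbs kernel; a log-domain version is safer for small eps or large costs.
    kernel = [[math.exp(-c / eps) for c in row] for row in cost]
    a = [1.0 / n] * n
    b = [1.0 / m] * m
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(iters):
        # Alternately rescale rows and columns so the plan matches marginals a and b.
        u = [a[i] / sum(kernel[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(kernel[i][j] * u[i] for i in range(n)) for j in range(m)]
    # Transport plan P_ij = u_i * K_ij * v_j; the distance is sum_ij P_ij * C_ij.
    return sum(u[i] * kernel[i][j] * v[j] * cost[i][j]
               for i in range(n) for j in range(m))
```

Minimizing such a distance between the encoder states of a sentence and its translation is one way to pull semantically equivalent token representations together without requiring a fixed token-to-token alignment.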

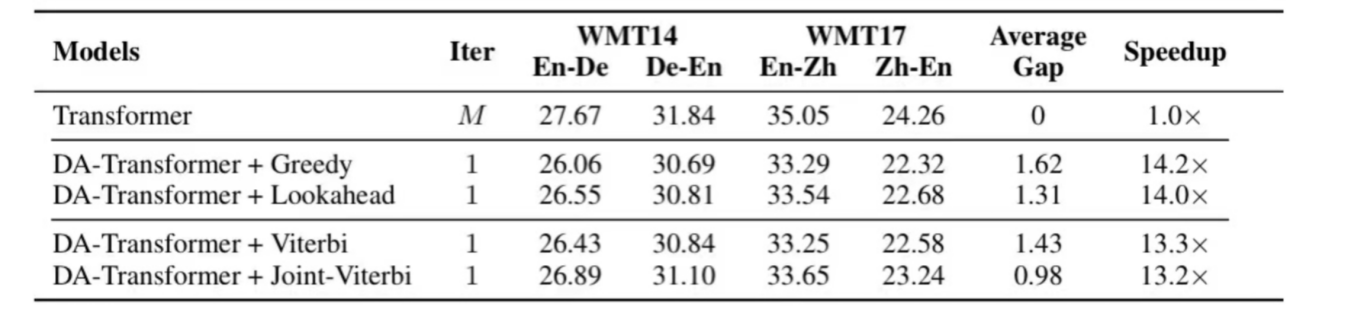

6. Viterbi Decoding of Directed Acyclic Transformer for Non-Autoregressive Machine Translation (Chenze Shao, Zhengrui Ma, Yang Feng).

Accepted by Findings of EMNLP.

Abstract: Non-autoregressive models achieve significant decoding speedup in neural machine translation but lack the ability to capture sequential dependency. Directed Acyclic Transformer (DA-Transformer) was recently proposed to model sequential dependency with a directed acyclic graph. Consequently, it has to apply a sequential decision process at inference time, which harms the global translation accuracy. In this paper, we present a Viterbi decoding framework for DA-Transformer, which is guaranteed to find the jointly optimal solution for the translation and decoding path under any length constraint. Experimental results demonstrate that our approach consistently improves the performance of DA-Transformer while maintaining a similar decoding speedup.
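The core of Viterbi decoding on a DAG is a dynamic program over (path length, end vertex). The Python sketch below makes several simplifying assumptions not taken from the paper: each vertex's score is the log-probability of its best token (taking the per-vertex maximum is exact only if each vertex emits its token independently given the path), paths must run from the first vertex to the last, and the output length is chosen by dividing each length's best score by `m ** alpha`, where `alpha` is a hypothetical length-normalization parameter.

```python
import math
from typing import List, Tuple

NEG_INF = float("-inf")


def viterbi_dag(node_logp: List[float], trans_logp: List[List[float]],
                max_len: int, alpha: float = 1.0) -> Tuple[List[int], float]:
    """Best vertex path through a DAG (illustrative sketch).

    node_logp[j]: assumed log-prob of the best token emitted at vertex j.
    trans_logp[i][j]: log transition prob from vertex i to vertex j (j > i).
    Paths start at vertex 0 and end at the last vertex.
    """
    L = len(node_logp)
    # dp[m][j]: best score of a path with exactly m vertices ending at vertex j.
    dp = [[NEG_INF] * L for _ in range(max_len + 1)]
    back = [[-1] * L for _ in range(max_len + 1)]
    dp[1][0] = node_logp[0]
    for m in range(2, max_len + 1):
        for j in range(1, L):
            for i in range(j):  # edges only go forward in the DAG
                if dp[m - 1][i] == NEG_INF:
                    continue
                score = dp[m - 1][i] + trans_logp[i][j] + node_logp[j]
                if score > dp[m][j]:
                    dp[m][j] = score
                    back[m][j] = i
    # Choose the length whose best complete path has the highest normalized score.
    best_m, best_score = -1, NEG_INF
    for m in range(1, max_len + 1):
        if dp[m][L - 1] == NEG_INF:
            continue
        normalized = dp[m][L - 1] / (m ** alpha)
        if normalized > best_score:
            best_m, best_score = m, normalized
    if best_m < 0:
        raise ValueError("no path reaches the last vertex within max_len")
    # Follow back-pointers from the last vertex to recover the path.
    path = [L - 1]
    m = best_m
    while m > 1:
        path.append(back[m][path[-1]])
        m -= 1
    path.reverse()
    return path, best_score
```

Because `dp[m][L - 1]` holds the best path with exactly `m` vertices, a length constraint can be imposed simply by restricting which values of `m` are considered, here via `max_len`.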

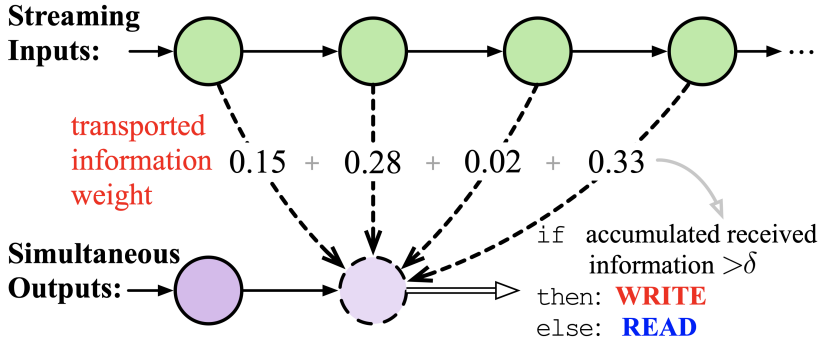

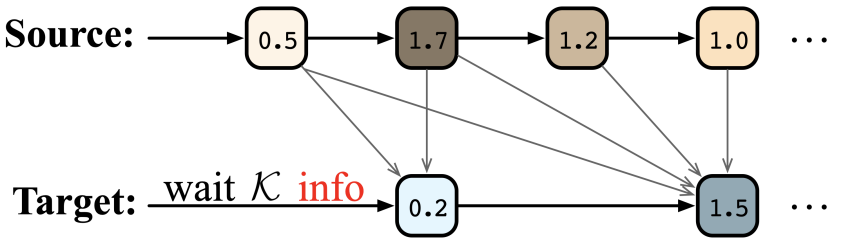

7. Wait-info Policy: Balancing Source and Target at Information Level for Simultaneous Machine Translation (Shaolei Zhang, Shoutao Guo, Yang Feng).

Accepted by Findings of EMNLP.

Abstract: Simultaneous machine translation (SiMT) outputs the translation while receiving the source inputs, and hence needs to balance the received source information and the translated target information to make a reasonable decision between waiting for inputs and outputting translation. Previous methods always balance source and target information at the token level, either directly waiting for a fixed number of tokens or adjusting the waiting based on the current token. In this paper, we propose a Wait-info Policy to balance source and target at the information level. We first quantify the amount of information contained in each token, named info. Then, during simultaneous translation, the decision of waiting or outputting is made by comparing the total info of the previous target outputs with that of the received source inputs. Experiments show that our method outperforms strong baselines and achieves a better balance via the proposed info.
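The comparison between accumulated source and target info can be sketched as a simple loop. In this Python sketch the per-token info values are given as inputs (the paper learns them), and `lag` is a hypothetical parameter: a target token is written once the received source info exceeds the info of the already-written target tokens by at least `lag`, or once the whole source has been read.

```python
from typing import List


def wait_info_policy(src_info: List[float], tgt_info: List[float],
                     lag: float) -> List[str]:
    """READ/WRITE sequence from info comparisons (illustrative sketch).

    Assumptions: src_info / tgt_info are precomputed per-token info values,
    and `lag` is a hypothetical info-level analogue of wait-k's k.
    """
    actions: List[str] = []
    read = 0
    src_total = 0.0  # info of source tokens received so far
    tgt_total = 0.0  # info of target tokens already written
    for info in tgt_info:
        # Wait for more source until it leads the target output by `lag` info.
        while read < len(src_info) and src_total - tgt_total < lag:
            src_total += src_info[read]
            read += 1
            actions.append("READ")
        actions.append("WRITE")
        tgt_total += info
    return actions
```

With every info value set to 1.0, this sketch reduces to the familiar token-level wait-k policy (for example, `lag=2` yields READ, READ, WRITE, READ, WRITE, ...); non-uniform info values let informative tokens count for more than function words.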

8. Turning Fixed to Adaptive: Integrating Post-Evaluation into Simultaneous Machine Translation (Shoutao Guo, Shaolei Zhang, Yang Feng).

Accepted by Findings of EMNLP.

Abstract: Simultaneous machine translation starts translating before the whole source sentence is read, adopting either a fixed policy or an adaptive policy to obtain better trade-offs between latency and translation quality. Previous methods rely too heavily on the READ/WRITE decision module, which writes the generated token immediately after deciding on a WRITE action; this inevitably leads to some incorrect actions. This paper introduces rationality evaluation into the READ/WRITE policy: before executing a decided action, it uses the change in source information to evaluate whether the READ/WRITE action is reasonable, and then executes the corresponding action accordingly. As a result, it reduces unreasonable operations and obtains a better balance between translation quality and latency.